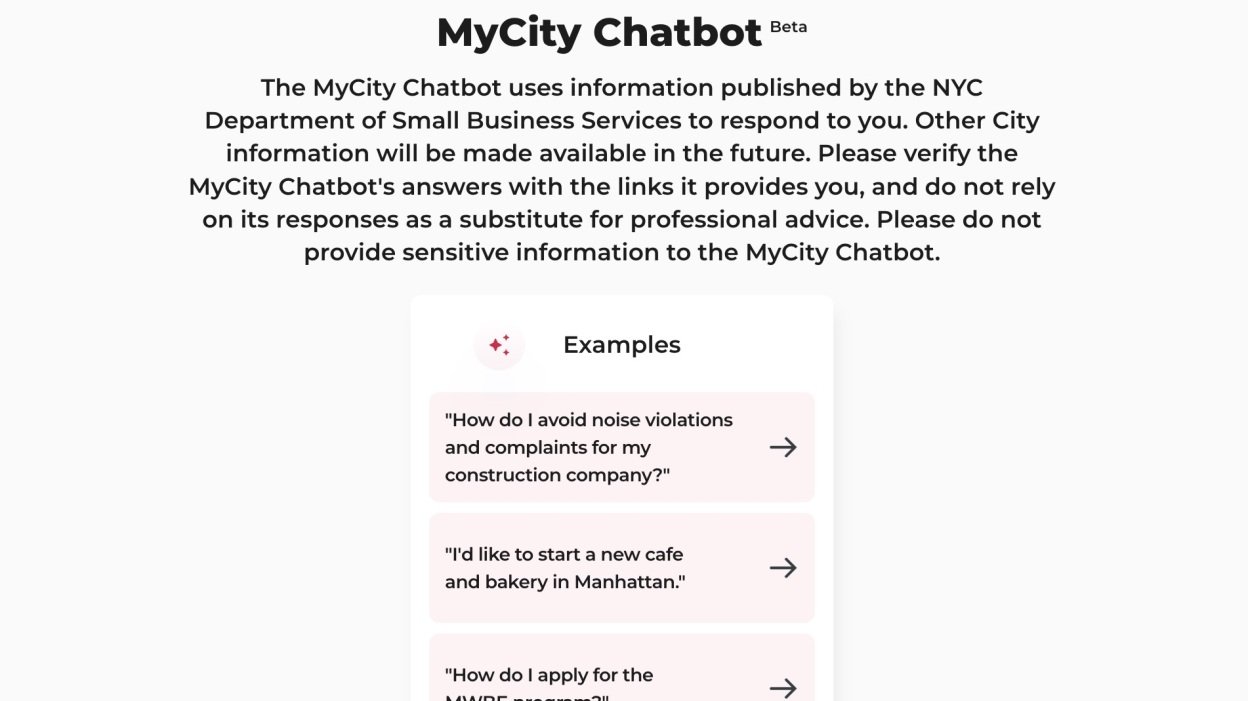

New York City’s “MyCity” artificial intelligence chatbot got off to a rocky start. The city government launched the technology five months ago in an attempt to help residents interested in running a business in New York find useful information.

While the bot will happily answer your questions with seemingly legitimate answers, an investigation by The Markup found that it tells a lot of lies. For example, when asked whether employers could take a cut of their employees' tips, the bot said yes, even though bosses are legally prohibited from taking employees' tips. When asked whether buildings were required to accept Section 8 vouchers, the bot said no, even though landlords cannot discriminate based on a prospective tenant's source of income. When asked whether you could make your store cashless, the bot said go ahead, when in fact New York City has banned cashless stores since early 2020. It said, “There are no regulations in New York City that require businesses to accept cash as a form of payment,” which is bullshit.

To its credit, the site does warn users not to rely solely on the chatbot's responses as a substitute for professional advice, and to verify any statements through the links provided. The problem is that some answers don't include links at all, which makes it harder to check whether what the bot is saying is true and accurate. This raises the question: Who is this technology actually for?

AI has a tendency to hallucinate

For anyone who has been paying attention to the latest developments in artificial intelligence, this story will not come as a shock. It turns out that chatbots sometimes just make things up. This is called hallucination: an AI model trained to respond to user queries will confidently conjure up an answer based on its training data, whether or not that answer is true. Because these networks are so complex, it's hard to know exactly when or why a bot will make something up in response to your question, but it happens often.

It’s not New York City’s fault that the city’s chatbot is hallucinating that you can force employees to give up their tips: the bot runs on Microsoft’s Azure AI, a common AI platform that companies such as AT&T, Reddit, and Volkswagen use to provide various services. The city presumably paid to use Microsoft's AI technology to power its chatbot in a sincere effort to help New Yorkers interested in starting a business, only to find that the bot gave egregiously wrong answers to important questions.

When will the hallucinations stop?

These unfortunate situations may soon be a thing of the past: Microsoft has a new safety system designed to catch problems and protect customers from the dark side of AI. In addition to tools that help stop hackers from using AI maliciously and that assess potential security vulnerabilities within AI platforms, Microsoft is introducing Groundedness Detection, which can monitor for potential hallucinations and intervene if necessary. ("Ungrounded" is another term for hallucinated.)

When Microsoft's system detects a possible hallucination, it can let customers test the current version of the AI against the version that existed before deployment; point out the hallucinated statement and then fact-check it or make "knowledge base edits," which presumably let you edit the underlying training set to eliminate the problem; rewrite the hallucinated statement before sending it to the user; or evaluate the quality of synthetic training data before using it to generate new synthetic data.

Microsoft's new system runs on a separate LLM that performs natural language inference (NLI), continuously evaluating the AI's claims against the source data. Of course, since the system fact-checking an LLM is itself an LLM, couldn't the NLI model hallucinate in its own analysis? (Maybe! I’m kidding, I’m kidding. Kind of.)
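To make the idea more concrete, here is a minimal sketch of the general groundedness-check pattern: split a bot's response into sentence-level claims, score each claim against the source text, and flag any claim that falls below a threshold. This is not Microsoft's implementation or the Azure API. `check_groundedness`, `ClaimCheck`, and the 0.8 threshold are hypothetical names and values chosen for illustration, the source and response strings are made-up sample text, and `toy_overlap_scorer` is a crude word-overlap stand-in for a real NLI model. Because it ignores negation and word order, it would miss plenty of real hallucinations.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Set

# Hypothetical scorer signature: (source, claim) -> support score in [0, 1].
# A real groundedness checker would call an NLI model here instead.
EntailmentScorer = Callable[[str, str], float]


def _tokens(text: str) -> Set[str]:
    """Lowercase word tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def toy_overlap_scorer(source: str, claim: str) -> float:
    """Toy stand-in for an NLI model: the fraction of the claim's words that
    also appear in the source. It ignores word order and negation, so it is
    NOT real natural language inference."""
    claim_words = _tokens(claim)
    if not claim_words:
        return 1.0  # nothing to check
    return len(claim_words & _tokens(source)) / len(claim_words)


@dataclass
class ClaimCheck:
    claim: str
    score: float
    grounded: bool


def check_groundedness(
    response: str,
    source: str,
    scorer: EntailmentScorer = toy_overlap_scorer,
    threshold: float = 0.8,  # hypothetical cutoff for "supported"
) -> List[ClaimCheck]:
    """Split a response into sentences and flag those the source does not support."""
    claims = [s.strip() for s in re.split(r"(?<=[.!?])\s+", response) if s.strip()]
    results = []
    for claim in claims:
        score = scorer(source, claim)
        results.append(ClaimCheck(claim, score, score >= threshold))
    return results


if __name__ == "__main__":
    # Made-up sample text for illustration only.
    source = "Since 2020, New York City requires most stores to accept cash."
    response = (
        "New York City requires most stores to accept cash. "
        "Employers may keep a share of workers' tips."
    )
    for check in check_groundedness(response, source):
        label = "grounded" if check.grounded else "UNGROUNDED"
        print(f"{label} ({check.score:.2f}): {check.claim}")
```

Swapping in a real entailment model only means passing a different `scorer` function; the flag-or-rewrite decision stays the same. That scorer is itself a model, though, which is exactly why it can make mistakes of its own.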

This could mean that organizations like the City of New York, which use Azure AI to power their products, will have an LLM on the case that eliminates hallucinations in real time. Maybe when the MyCity chatbot tries to say that you can run a cashless business in New York, the NLI model will quickly correct that statement, so that what you as the end user see is a true, accurate answer.

Microsoft just launched the new software, so it's unclear how effective it will be. But for now, if you're a New Yorker, or anyone using a government-run chatbot to find answers to legitimate questions, you should take these answers with a grain of salt. I don’t think “MyCity Chatbot Says I Can!” will hold up in court.