Large language models (LLMs) are artificial intelligence (AI) models trained to understand and generate human-like text. Learn more about large language models, their popular applications, and how they differ from other machine learning models.

Large language models are deep learning models trained on vast amounts of text. An LLM is a neural network, typically built on the transformer architecture, that looks for patterns in sequential data (such as how words relate to each other within sentences) to establish context. When given a text prompt, the model generates a relevant, human-like response.

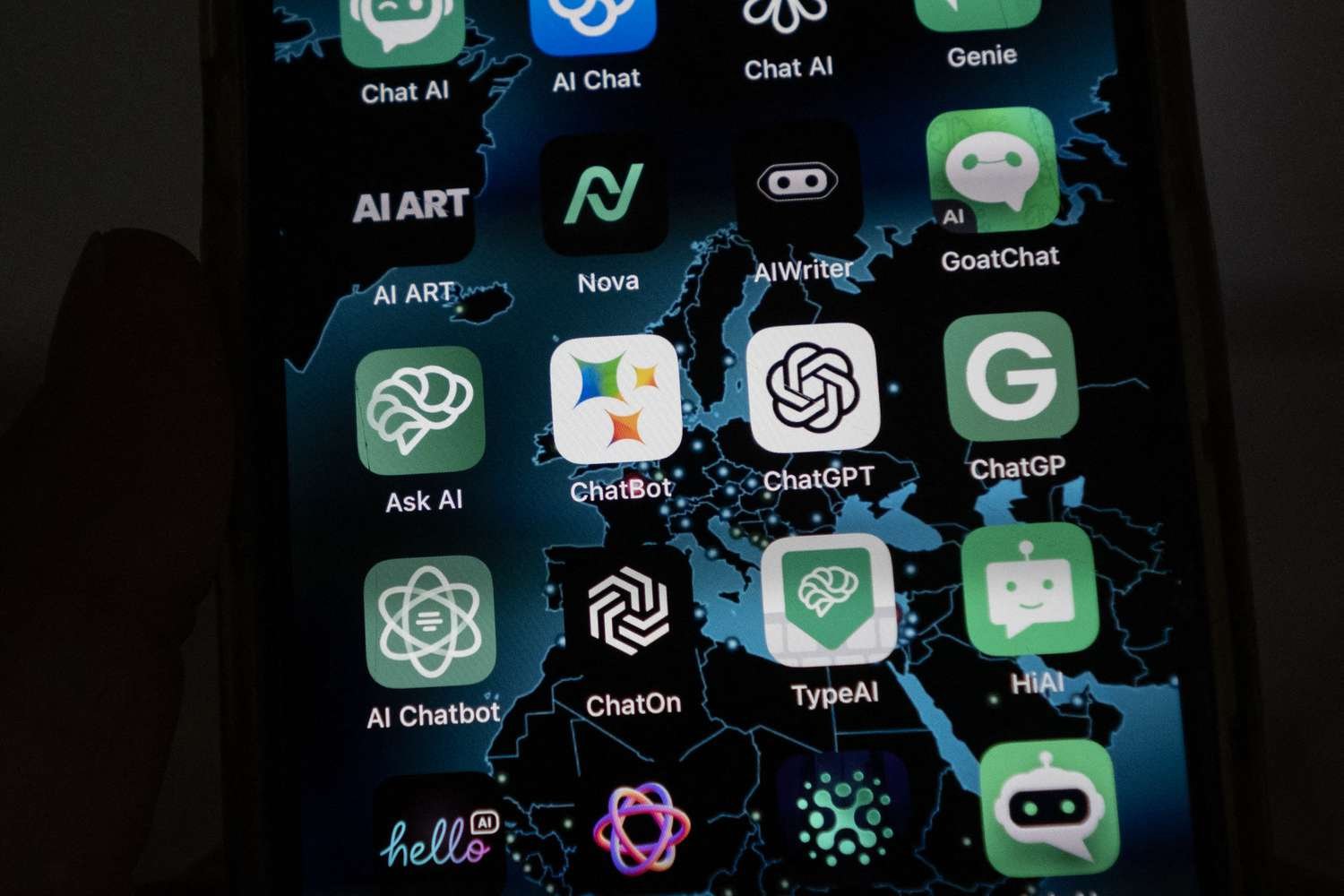

The most popular application of LLMs is AI chatbots. Examples of large language models include GPT-4o (which powers ChatGPT) and PaLM 2 (which originally powered Google Bard, since renamed Gemini). They live up to their name: the largest LLMs are too big to run on a single ordinary computer, so they are usually offered as web services rather than stand-alone programs.

Transformer models are composed of multiple layers that can be stacked to build increasingly capable models. LLMs in particular rely on two key features of the transformer architecture: positional encoding and self-attention.

A transformer processes all the words in a passage at once rather than one after another, so positional encoding adds information about each word's position to let the model still account for word order. Self-attention assigns each word a weight for every other word in the input, indicating how relevant that word is in context. This lets the model focus on the most important parts of a long text.
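To make these two ideas concrete, here is a minimal pure-Python sketch. It assumes the sinusoidal positional encoding used in the original Transformer design and a simplified self-attention in which queries, keys, and values are the input vectors themselves; real models also apply learned projection matrices, which are omitted here. The toy embeddings are made up for illustration.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encoding: one d_model-sized vector per position.

    Even dimensions use sin and odd dimensions use cos, with wavelengths that
    grow geometrically as the dimension index increases.
    """
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x.

    Simplification: learned query/key/value projections are omitted.
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    d = len(x[0])
    outputs, weights = [], []
    for query in x:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        w = softmax(scores)
        weights.append(w)
        # Each output vector is a weighted average of all input vectors.
        outputs.append([sum(w[j] * x[j][c] for j in range(len(x))) for c in range(d)])
    return outputs, weights

# Usage: three made-up 4-dimensional token embeddings plus their positions.
embeddings = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
positions = positional_encoding(len(embeddings), 4)
inputs = [[e + p for e, p in zip(row, pos)] for row, pos in zip(embeddings, positions)]
_, attn = self_attention(inputs)
for row in attn:
    print([round(w, 3) for w in row])
```

Each printed row shows how one token distributes its attention across the sequence; the weights in a row always sum to 1.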

Through extensive self-supervised learning on unlabeled text, an LLM learns to predict the next word in a sentence with high accuracy. Grammar rules are not pre-programmed into large language models; the model infers grammatical patterns from the text it is trained on.
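The next-word prediction objective can be illustrated with something far simpler than a transformer. The sketch below swaps in a bigram counter: it learns from raw text which word most often follows each word, with no grammar rules supplied. It is only an analogy for the training objective, not how an LLM actually computes its predictions; the function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = follows.get(word.lower())  # .get avoids creating an empty entry
    if not counts:
        return None
    return counts.most_common(1)[0][0]

corpus = "the cat sat on the mat the cat ate the fish"
follows = train_bigram(corpus)
print(predict_next(follows, "the"))  # → cat
```

An LLM replaces these raw counts with a neural network that conditions on the whole preceding context rather than just the previous word, which is what lets it generalize to sentences it has never seen.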

Virtual assistants like Alexa and Siri have traditionally responded to a set of predetermined commands with pre-programmed answers. An LLM, by contrast, can analyze large amounts of text input (whole paragraphs or longer) and generate original, coherent, and creative responses.

With the help of an LLM, AI programs can perform tasks such as:

- Content generation: writing stories, poetry, scripts, and marketing materials

- Summarization: turning meetings or transcripts into concise notes

- Translation: converting text between human languages, or between natural language and programming code

- Classification: sorting text into categories and analyzing its tone (positive, negative, or neutral)
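Tasks like tone classification are usually framed as a prompt, with the model's free-text reply mapped back onto a fixed set of labels. The sketch below shows only that framing; the prompt wording and both helper functions are hypothetical, and the actual call to an LLM is left out because it depends on the service being used.

```python
LABELS = ("positive", "negative", "neutral")

def build_sentiment_prompt(text):
    """Frame tone classification as a plain-text instruction (hypothetical wording)."""
    return (
        "Classify the tone of the following text as positive, negative, or neutral.\n"
        "Answer with one word.\n\n"
        f"Text: {text}\nTone:"
    )

def parse_label(reply):
    """Map the model's reply onto an allowed label, or None if it matches none."""
    cleaned = reply.strip().lower().strip(".!")
    for label in LABELS:
        if cleaned.startswith(label):
            return label
    return None

prompt = build_sentiment_prompt("The support team fixed my issue in minutes!")
print(prompt)
# The prompt would be sent to an LLM; suppose it replies " Positive."
print(parse_label(" Positive."))  # → positive
```

Validating the reply against a fixed label set matters because an LLM generates free text and may not answer in exactly the requested format.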

While AI chatbots are particularly helpful in customer service, LLMs have promising applications in fields ranging from engineering to healthcare. For example, an LLM can analyze research papers, health records, and other data to help researchers develop new medical treatments.

Because LLMs learn language by identifying relationships between words, they are not limited to one human language. Likewise, an LLM does not need to be trained separately for each specific task. Together, these properties give LLMs considerable flexibility in understanding the nuances of human language.

On the other hand, LLMs require large amounts of training data to be effective. For example, GPT-4 was trained on books, articles, and other text available on the internet before it was released to the public.

Training an LLM requires a great deal of time and computing resources, which leads to high energy costs. Although pretraining does not require labeled data, human expertise is still needed to develop and maintain an LLM. The large amounts of data required to train LLMs also pose challenges, particularly when that data includes sensitive information such as health or financial records.
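To give a sense of scale, a widely used rule of thumb from the scaling-law literature estimates training compute as roughly 6 floating-point operations per model parameter per training token. The sketch below turns that into GPU-days and electricity. Every number in the example (model size, token count, per-GPU throughput, power draw) is a hypothetical assumption, and the estimate ignores cooling, networking, and failed runs, so real costs are higher.

```python
SECONDS_PER_DAY = 86_400

def training_flops(n_params, n_tokens):
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens

def gpu_days(total_flops, flops_per_gpu_per_second):
    """Days of compute on a single GPU at an assumed sustained throughput."""
    return total_flops / flops_per_gpu_per_second / SECONDS_PER_DAY

def energy_kwh(days_of_gpu_time, watts_per_gpu):
    """Electricity drawn by the GPUs alone, ignoring cooling and other overheads."""
    return days_of_gpu_time * 24 * watts_per_gpu / 1000

# Hypothetical example: every input below is an illustrative assumption.
flops = training_flops(7e9, 1e12)  # 7 billion parameters, 1 trillion tokens
days = gpu_days(flops, 1e14)       # assume 100 TFLOP/s sustained per GPU
kwh = energy_kwh(days, 700)        # assume 700 W per GPU
print(f"{flops:.2e} FLOPs, {days:,.0f} GPU-days, {kwh:,.0f} kWh")
```

Spreading the work across thousands of GPUs shortens the wall-clock time but not the total GPU-days or energy, which is why training large models remains expensive.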